In the high-stakes race toward full autonomy, the prevailing industry wisdom has long championed a “more is better” approach to vehicle sensors, building a complex digital nervous system by fusing cameras, radar, and LiDAR. This multi-modal strategy rests on redundancy: the strengths of one sensor type compensate for the weaknesses of another. Radar keeps working in rain and fog where cameras struggle, while LiDAR measures distances directly to produce precise 3D maps of the surroundings. Amid this consensus, Tesla has made a bold and polarizing departure. It never adopted LiDAR for its production cars, and it has since stopped relying on radar as well, betting on vision alone. By staking its autonomous systems on cameras and a sophisticated neural network, the company is not just challenging industry norms but proposing a fundamentally different path to self-driving, one that will prove either a stroke of genius or a monumental miscalculation. The pivot forces the automotive world to reconsider the nature of perception itself, and whether mimicking human vision is the most direct, or the most perilous, route to replacing the human driver.

The Rationale Behind a Singular Focus

The decision to abandon multi-sensor fusion was driven by a core engineering philosophy aimed at a problem known as “sensor contention.” Contention arises when different sensors, such as radar and cameras, report conflicting information about the driving environment. Instead of producing a clearer picture, these discrepancies introduce ambiguity and hesitation into the vehicle’s decision-making. A frequently cited example is radar’s difficulty distinguishing a harmless overhead highway sign from a genuine obstacle on the road, or a vehicle stopped on the shoulder from one stopped in the lane ahead. This ambiguity has been blamed for incidents of “phantom braking,” in which the vehicle brakes suddenly and without cause, a jarring and potentially unsafe experience for occupants and following traffic. According to Tesla’s leadership, relying on vision alone forces the system to resolve these edge cases through software and neural network intelligence, much as a human driver does, rather than by reconciling the conflicting outputs of fundamentally different hardware. The company contends that true autonomy is impossible until the vision system is capable enough to interpret the world on its own.
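To make sensor contention concrete, here is a deliberately simplified Python sketch. The `Detection` record, the confidence values, and both braking rules are hypothetical illustrations, not Tesla's or any other manufacturer's actual logic; production systems rely on far richer object tracking and fusion.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One sensor's report about a possible obstacle ahead (hypothetical schema)."""
    sensor: str        # "radar" or "camera"
    in_path: bool      # does this sensor place the object in our lane?
    confidence: float  # detection confidence, 0.0 to 1.0


def should_brake_or_fusion(dets: list[Detection], threshold: float = 0.5) -> bool:
    """Naive fusion: brake if ANY sensor confidently reports an in-path obstacle."""
    return any(d.in_path and d.confidence >= threshold for d in dets)


def should_brake_vision_only(dets: list[Detection], threshold: float = 0.5) -> bool:
    """Vision-only: ignore radar entirely and act on camera detections alone."""
    return any(
        d.sensor == "camera" and d.in_path and d.confidence >= threshold for d in dets
    )


# Scenario 1: overhead gantry sign. Radar, with poor elevation resolution, reports a
# strong return "in path"; the camera sees the sign clearly above the lane.
overhead_sign = [
    Detection("radar", in_path=True, confidence=0.9),
    Detection("camera", in_path=False, confidence=0.95),
]

# Scenario 2: stalled car in dense fog. Radar sees it; the camera barely does.
stalled_car_in_fog = [
    Detection("radar", in_path=True, confidence=0.85),
    Detection("camera", in_path=True, confidence=0.2),
]

for name, dets in [("overhead sign", overhead_sign), ("stalled car in fog", stalled_car_in_fog)]:
    print(
        f"{name}: OR-fusion brakes={should_brake_or_fusion(dets)}, "
        f"vision-only brakes={should_brake_vision_only(dets)}"
    )
# overhead sign: OR-fusion brakes=True, vision-only brakes=False
# stalled car in fog: OR-fusion brakes=True, vision-only brakes=False
```

The toy rules show the trade-off in both directions: trusting any confident sensor produces a phantom brake for the overhead sign, while ignoring radar avoids that brake but also misses the stalled car until the camera's own confidence improves. Neither rule reflects how a real system arbitrates; the point is only that disagreement has to be resolved somewhere, either in fusion logic or by removing one of the voices.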

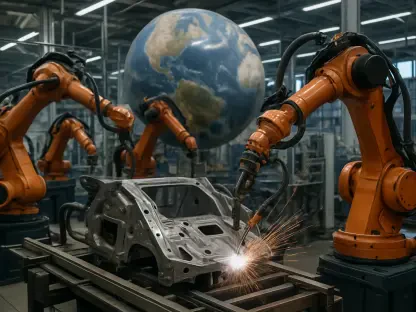

This commitment to a vision-only architecture now extends across Tesla’s entire vehicle lineup, marking a definitive technological fork in the road for the company. On every new vehicle, Autopilot and Full Self-Driving (FSD) rely exclusively on a suite of cameras (typically eight) and a powerful onboard computer running the “Tesla Vision” system. The cameras provide a 360-degree view around the car and feed large volumes of visual data into a neural network that is continually trained to interpret complex road scenarios, identify objects, and predict their behavior. Underscoring the depth of this conviction, the company has deliberately chosen not to activate the high-definition radar installed in its newer Model S and Model X vehicles for any FSD functionality. The message is clear: Tesla regards the radar as unnecessary, its role effectively superseded by advances in vision processing. By creating this hard dependency on cameras, Tesla is wagering that it can develop a more scalable and cost-effective autonomous solution, one that avoids the high costs and supply-chain complexity of technologies like LiDAR.

A Calculated Risk with Industry-Wide Implications

Tesla’s singular approach to autonomy presents a high-risk, high-reward scenario that remains a central point of debate in the autonomous vehicle sector. On one hand, if the company masters computer vision to a degree that matches or exceeds the reliability of multi-sensor systems, it could gain a formidable competitive advantage. Such a breakthrough would validate its engineering philosophy and significantly lower the production cost and complexity of its vehicles, making advanced driver-assistance features more accessible. That cost efficiency could become a powerful moat, making it hard for competitors reliant on expensive LiDAR and radar units to match its prices for similar functionality. Furthermore, a vision-based system that truly understands its environment in a human-like way could handle rare “long-tail” edge cases with more nuance than a system that simply fuses disparate data points. Success would suggest that the most direct path to fully autonomous driving runs through a focused, software-centric approach rather than a hardware-heavy one.

On the other hand, the potential pitfalls are significant and frequently highlighted by critics and competitors. The inherent limitations of cameras remain a primary concern: degraded performance in heavy rain, snow, or fog, and susceptibility to glare and low light. Competitors argue that forgoing the robustness of radar and the precision of LiDAR is an unnecessary gamble with safety. Neural networks can be trained to infer depth and velocity from 2D images, but this is a difficult inference problem that may never fully match the direct physical measurements other sensors provide: LiDAR typically times the round trip of laser pulses to measure distance, and radar measures relative speed from the Doppler shift of its returns. If Tesla’s vision system fails to consistently overcome these challenges, it could lag behind competitors in all-weather reliability and overall safety. The industry is watching closely, because the outcome of Tesla’s vision-only experiment will likely shape the technological direction and regulatory landscape for autonomous vehicles for years to come.
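One classic geometric cue shows why inferring depth from a single 2D image is harder than measuring it. The Python sketch below uses a pinhole-camera model, Z = f·H/h, where f is the focal length in pixels, H the object's real height, and h its height in the image. This is an illustrative stand-in, not how Tesla's neural network actually estimates depth, and the focal length, car height, and range are hypothetical numbers.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def depth_from_known_size(focal_px: float, real_height_m: float, image_height_px: float) -> float:
    """Pinhole-camera range estimate Z = f * H / h.

    Only works if we *assume* the object's real height H (e.g. "cars are about 1.5 m tall").
    """
    return focal_px * real_height_m / image_height_px


def lidar_range_m(round_trip_s: float) -> float:
    """Time-of-flight range: the pulse travels out and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


# Hypothetical camera with a 1000 px focal length, assuming a 1.5 m tall car 100 m away.
focal_px, assumed_car_height_m, true_range_m = 1000.0, 1.5, 100.0
car_height_px = focal_px * assumed_car_height_m / true_range_m  # 15.0 px in the image

# A single-pixel error in the measured image height moves the estimate by metres.
print(depth_from_known_size(focal_px, assumed_car_height_m, car_height_px))      # 100.0
print(depth_from_known_size(focal_px, assumed_car_height_m, car_height_px + 1))  # 93.75
print(depth_from_known_size(focal_px, assumed_car_height_m, car_height_px - 1))  # roughly 107.1

# The same car measured by a LiDAR pulse: range follows from the round-trip time alone.
round_trip_s = 2 * true_range_m / SPEED_OF_LIGHT_M_S
print(lidar_range_m(round_trip_s))  # approximately 100 (up to floating-point rounding)
```

Because Z = f·H/h, a pixel error Δh shifts the estimate by roughly Z·Δh/h, so at 15 px one pixel is about a 7% range error. A wrong guess about the object's real size biases the result further: a 1.8 m SUV at the same distance would appear 18 px tall, and the 1.5 m assumption would place it at roughly 83 m. A LiDAR round-trip time, by contrast, converts to range with no assumption about what the object is. Learned depth networks combine many cues and do far better than this single rule, but they still infer distance rather than measure it.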

The Path Forged

The strategic decision to pursue a vision-only path to autonomy represents a fundamental split from the industry’s established course. It challenges the deeply held belief that sensor redundancy is an absolute requirement for safety and reliability in self-driving systems. By focusing exclusively on neural networks that interpret visual data with human-like acuity, Tesla aims to build a more streamlined and scalable solution. The journey requires overcoming significant engineering hurdles in perception under challenging environmental conditions and developing software capable of navigating the immense complexity of real-world driving. Whatever its outcome, the venture will give the automotive world critical evidence about the capabilities and limitations of artificial intelligence in the physical world, and it is likely to influence autonomous-technology roadmaps across the entire industry.