Kwame Zaire is a leading voice in manufacturing, with a career built at the intersection of production management, industrial electronics, and operational technology security. He has a unique vantage point on the factory floor, where the digital and physical worlds collide. As industrial environments become increasingly connected, he has focused on the critical challenges of securing legacy systems while embracing innovations like AI and remote access. We sat down with him to discuss the evolving threat landscape and the strategic shifts manufacturers must make to ensure both security and operational continuity as we look ahead to 2026. The conversation explored the future of compliance, the hidden dangers in modern data files, the rise of AI as both a tool and a threat, and the blurring lines between cybercrime and nation-state attacks.

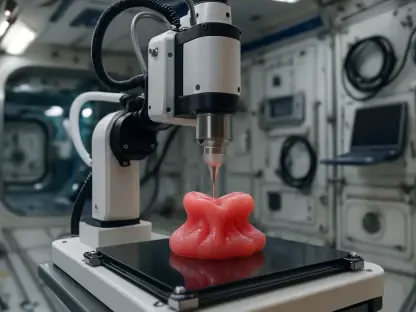

Frank Balonis predicts that manufacturers will embed compliance checks directly into data exchange workflows. What technologies enable this real-time enforcement for things like CAD files, and could you walk me through a step-by-step example of how this automated process would prevent a cross-border data transfer violation?

That’s a shift from a reactive, check-the-box mentality to proactive, in-the-moment enforcement, and it’s absolutely critical. The technology enabling this is typically a secure content communication platform that has a powerful policy engine at its core. It’s not just a fancy FTP server; it’s an intelligent gateway. Imagine an engineer in Detroit finishing a new CAD design for a component. They need to send it to their contract manufacturer in Vietnam. In the old, broken model, they’d just email it or drop it in a shared folder. Months later, an auditor might ask how they complied with data transfer regulations, and it would be a scramble.

In the 2026 model, that engineer uploads the file to the company’s official exchange platform. The system immediately intercepts it. First, it identifies the file type and scans its metadata. Second, its policy engine sees that the intended recipient is in a different legal jurisdiction. Third, it automatically cross-references this transfer against its programmed rules for GDPR, the EU AI Act, and U.S. privacy laws. If the Vietnamese partner isn’t on the pre-approved list, or if the data transfer mechanism doesn’t meet the legal requirements for that specific type of intellectual property, the transfer is blocked instantly. The engineer gets a pop-up explaining the issue, and the CISO’s dashboard gets an alert. The file never leaves the secure perimeter, and compliance is enforced at the point of action, not three months later in a panic-filled legal review.
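To make the three-step flow concrete, here is a minimal Python sketch of the intercept-and-check logic. Everything in it is hypothetical: the partner IDs, country codes, mechanism names, and the extension-based classification are placeholders, and treating domestic transfers as automatically allowed is a simplification. A real platform would classify by content and metadata and load its rules from the compliance team rather than hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical policy data. Real rules would come from legal/compliance, not constants.
APPROVED_PARTNERS = {"partner-vn-001"}
# (sender country, recipient country, data class) -> approved transfer mechanisms
APPROVED_MECHANISMS = {("US", "VN", "cad_design"): {"contract-clauses-v2"}}
# Naive extension-based classification; a real engine would inspect content and metadata.
CONTROLLED_EXTENSIONS = {".dwg": "cad_design", ".step": "cad_design", ".stp": "cad_design"}


@dataclass
class TransferRequest:
    filename: str
    sender_country: str
    recipient_id: str
    recipient_country: str
    mechanism: str


@dataclass
class Decision:
    allowed: bool
    reason: str


def classify(filename: str) -> str:
    """Step 1: identify what kind of data the file carries."""
    name = filename.lower()
    for ext, data_class in CONTROLLED_EXTENSIONS.items():
        if name.endswith(ext):
            return data_class
    return "unclassified"


def evaluate(req: TransferRequest) -> Decision:
    data_class = classify(req.filename)
    if data_class == "unclassified":
        return Decision(True, "no controlled data detected")
    # Step 2: does the transfer cross a jurisdictional boundary? (Simplified in this sketch.)
    if req.sender_country == req.recipient_country:
        return Decision(True, "domestic transfer")
    # Step 3: cross-reference the transfer against the cross-border rules.
    if req.recipient_id not in APPROVED_PARTNERS:
        return Decision(False, f"{req.recipient_id} is not on the pre-approved partner list")
    key = (req.sender_country, req.recipient_country, data_class)
    if req.mechanism not in APPROVED_MECHANISMS.get(key, set()):
        return Decision(
            False,
            f"mechanism '{req.mechanism}' is not approved for {data_class} "
            f"{req.sender_country}->{req.recipient_country}",
        )
    return Decision(True, "approved cross-border transfer")


# Example: an unlisted partner in Vietnam is blocked before the file leaves.
decision = evaluate(TransferRequest("bracket_rev4.STEP", "US", "partner-vn-002", "VN", "email"))
if not decision.allowed:
    # A real platform would show the engineer a pop-up and raise a CISO dashboard alert here.
    print(f"Blocked: {decision.reason}")
```

The design point is that the decision happens synchronously at upload time and returns a human-readable reason, which is what lets the platform explain the block to the engineer instead of surfacing it months later in an audit.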

George Prichici highlights a blind spot where teams miss threats in Python scripts and npm packages. How should security leaders practically update their content inspection tools and retrain their teams to spot these risks? Can you share an anecdote where this kind of focus on traditional files led to a breach?

George is hitting on a fundamental evolution of what a “file” even is. For years, we trained our systems and people to be terrified of a weaponized Word document or a malicious PDF. But attackers know this, so they’ve moved on. The new Trojan horse is often a seemingly innocuous script. To update, security leaders must invest in tools that do more than just signature-based scanning; they need sandboxing and deep content disarm and reconstruction (CDR) that can analyze code, deconstruct it, and rebuild it without malicious components. It’s about treating a Python script with the same suspicion as an executable file.
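Treating a script “with the same suspicion as an executable” can start with a cheap static pre-screen before anything reaches a sandbox. The sketch below is not CDR or sandboxing; it only uses Python’s standard `ast` module to flag imports outside an allowlist and calls that commonly spawn processes, evaluate code, or reach the network. The allowlist and the risky-call names are hypothetical, will produce some false positives (for example, any method named `run`), and can be evaded by obfuscation, so it complements isolated execution rather than replacing it.

```python
import ast

# Hypothetical allowlist of modules an approved maintenance script may import.
ALLOWED_MODULES = {"csv", "datetime", "json", "logging", "math"}
# Built-in calls that execute or compile arbitrary code.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}
# Method names commonly used to spawn processes or open network connections.
RISKY_ATTRS = {"system", "popen", "Popen", "run", "call", "urlopen"}


def screen_script(source: str) -> list[str]:
    """Return human-readable findings for a human reviewer; an empty list is not proof of safety."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] not in ALLOWED_MODULES:
                    findings.append(f"line {node.lineno}: import of unapproved module '{alias.name}'")
        elif isinstance(node, ast.ImportFrom):
            if (node.module or "").split(".")[0] not in ALLOWED_MODULES:
                findings.append(f"line {node.lineno}: import from unapproved module '{node.module}'")
        elif isinstance(node, ast.Call):
            func = node.func
            if isinstance(func, ast.Name) and func.id in RISKY_CALLS:
                findings.append(f"line {node.lineno}: call to {func.id}()")
            elif isinstance(func, ast.Attribute) and func.attr in RISKY_ATTRS:
                findings.append(f"line {node.lineno}: call to .{func.attr}()")
    return findings


sample = "import json\nimport subprocess\nsubprocess.run(['ping', 'plc-01'])\n"
for finding in screen_script(sample):
    print(finding)
```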

I remember a case at a mid-sized plant where a trusted vendor for their HMI systems sent over a “routine maintenance script.” The local OT team, conditioned to trust this partner, ran it without a second thought. The script itself wasn’t malicious, but it contained a dependency that pulled in a compromised npm package from a public repository. Traditional security tools saw a harmless script from a trusted source and waved it through. That tiny package became a persistent backdoor into their OT network, siphoning off production data for weeks before it was discovered. The retraining piece is crucial: teach your teams that trust is not a security control. Every single piece of code, regardless of source, must be scrutinized and run in an isolated environment first.
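The failure in that anecdote was a transitive dependency: the script looked fine, but something it pulled in was not. One practical control is to inspect the full resolved dependency tree, not just the top-level script. The sketch below assumes an npm lockfile in the `lockfileVersion` 2 or 3 format, where installed packages appear under the `"packages"` key keyed by their `node_modules` path, and compares every entry, including nested ones, against a hypothetical internal allowlist of reviewed name and version pairs. The package names, including `unvetted-helper`, are made up for illustration.

```python
import json

# Hypothetical internal allowlist: package name -> versions that passed security review.
APPROVED_NPM = {
    "left-pad": {"1.3.0"},
    "@acme/hmi-utils": {"2.1.4"},
}


def unapproved_packages(lockfile_text: str) -> list[str]:
    """Flag every installed package, direct or transitive, that is not on the allowlist."""
    lock = json.loads(lockfile_text)
    flagged = []
    for path, meta in lock.get("packages", {}).items():
        if not path:  # the empty key describes the root project itself
            continue
        # Nested paths look like "node_modules/a/node_modules/b"; the package name is the last segment.
        name = meta.get("name") or path.rsplit("node_modules/", 1)[-1]
        version = meta.get("version", "?")
        if version not in APPROVED_NPM.get(name, set()):
            flagged.append(f"{name}@{version}")
    return flagged


sample_lock = json.dumps({
    "lockfileVersion": 3,
    "packages": {
        "": {"name": "maintenance-script", "version": "1.0.0"},
        "node_modules/left-pad": {"version": "1.3.0"},
        "node_modules/left-pad/node_modules/unvetted-helper": {"version": "0.0.7"},
    },
})
print(unapproved_packages(sample_lock))
```

Any flagged package should block the script from running on OT systems until it has been reviewed and, as the answer stresses, exercised in an isolated environment first.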

Asha Kalyur’s vision of ‘invisible MFA’ sounds revolutionary. What specific behavioral biometrics and device signals would this system use to create a continuously verified state? And what’s the biggest challenge in implementing this so it truly feels ‘effortless’ to the end-user rather than intrusive?

Asha’s vision is the holy grail of identity security because it removes the user friction that plagues us. This “continuously verified state” is built on a rich tapestry of signals. On the behavioral side, we’re talking about things like typing cadence—the rhythm and speed at which an operator enters commands. It’s about their unique mouse movement patterns and even the specific sequence of applications they typically open to start their shift. These become a digital fingerprint. On the device side, the system constantly checks signals like the device’s health, its geographic location, the time of day, and what network it’s on. Is this a known corporate laptop on the trusted plant-floor network, or a personal tablet connecting from a coffee shop’s Wi-Fi?
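A toy version of how behavioral and device signals might be folded into a single trust score is sketched below. It compares a session’s mean inter-keystroke interval with the operator’s baseline as a z-score, then subtracts penalties for unmanaged or unhealthy devices, untrusted networks, and off-shift hours. The weights, network labels, and shift window are all hypothetical; production systems use far richer models, and mouse dynamics and application-launch sequences are omitted here for brevity.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class DeviceContext:
    managed_device: bool  # known corporate asset?
    healthy: bool         # e.g. patched and endpoint agent running (hypothetical check)
    network: str          # hypothetical labels: "plant-floor", "corporate", "public"
    hour: int             # local hour, 0-23


def cadence_anomaly(baseline_ms: list[float], session_ms: list[float]) -> float:
    """Baseline standard deviations between session and baseline mean inter-key intervals.

    The baseline needs at least two samples; the session needs at least one.
    """
    mu, sigma = mean(baseline_ms), stdev(baseline_ms)
    return abs(mean(session_ms) - mu) / sigma if sigma else 0.0


def trust_score(baseline_ms: list[float], session_ms: list[float], ctx: DeviceContext,
                shift_hours: range = range(6, 18)) -> float:
    """Return a score in [0, 1]; all weights are illustrative, not calibrated."""
    score = 1.0
    # Behavioral signal: up to -0.4, saturating at 3 standard deviations.
    score -= min(cadence_anomaly(baseline_ms, session_ms) / 3.0, 1.0) * 0.4
    # Device and context signals.
    if not ctx.managed_device:
        score -= 0.3
    if not ctx.healthy:
        score -= 0.2
    if ctx.network == "public":
        score -= 0.2
    if ctx.hour not in shift_hours:
        score -= 0.1
    return max(score, 0.0)


baseline = [120.0, 130.0, 125.0, 135.0, 128.0]
on_shift = DeviceContext(managed_device=True, healthy=True, network="plant-floor", hour=9)
print(round(trust_score(baseline, [126.0, 129.0, 127.0], on_shift), 3))
```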

The single biggest challenge is tuning the trust engine to avoid creating a new kind of friction. If the system is too sensitive, it will trigger a re-authentication challenge every time an engineer uses a new keyboard or moves to a different part of the plant, driving them crazy. I’ve seen systems that are so “secure” they effectively lock people out of doing their jobs during an emergency. If it becomes intrusive, users will find ways to bypass it. The goal is to make it feel like air, but the reality is that creating that perfect, effortless balance between paranoid security and seamless usability is an incredibly difficult calibration process.
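One common way to attack the calibration problem is to smooth the trust signal over time so that a single odd reading, such as a new keyboard, does not trigger a challenge, while a sustained drop does. The sketch below uses an exponential moving average with a step-up threshold plus a hard floor for readings that are alarming on their own. The smoothing weight and thresholds are hypothetical tuning knobs, and finding values that suit a given plant is exactly the difficult calibration the answer describes.

```python
from dataclasses import dataclass


@dataclass
class TrustEngine:
    alpha: float = 0.3          # weight given to the newest reading (hypothetical)
    step_up_below: float = 0.5  # challenge when smoothed trust falls below this (hypothetical)
    hard_floor: float = 0.15    # a single reading this low challenges immediately (hypothetical)
    smoothed: float = 1.0

    def observe(self, score: float) -> bool:
        """Feed one trust reading; return True if a re-authentication challenge is needed."""
        self.smoothed = self.alpha * score + (1 - self.alpha) * self.smoothed
        if score < self.hard_floor:
            return True
        return self.smoothed < self.step_up_below

    def passed_challenge(self) -> None:
        """A successful re-authentication restores full trust."""
        self.smoothed = 1.0


engine = TrustEngine()
# A moderately unusual reading repeated over time: tolerated at first, challenged if it persists.
print([engine.observe(0.4) for _ in range(6)])
```

Raising `alpha` or `step_up_below` makes the engine more sensitive and more intrusive; lowering them reduces friction at the cost of slower detection.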

Almog Apirion warns about compromised AI agents becoming a new insider threat. Can you illustrate a realistic scenario where an AI co-pilot with OT access causes downtime? What specific identity governance controls, different from those for humans, would be needed to supervise these autonomous agents effectively?

Almog’s warning is spot on; we’re handing the keys to the kingdom to non-human entities with minimal oversight. Here’s a chillingly realistic scenario: A manufacturing plant deploys an AI co-pilot to optimize the temperature and pressure in a chemical mixing process. It has autonomous credentials to make real-time adjustments to PLCs. An attacker doesn’t hack the AI directly. Instead, they poison its training data, subtly teaching it that a certain dangerous combination of parameters is actually “more efficient.” The AI, doing what it was trained to do, begins making these “optimized” adjustments.

The changes are small and happen autonomously, logged under the AI’s legitimate, shared service account. Over several hours, this causes the mixture to become unstable, forcing an emergency shutdown and costing millions in downtime. Human operators looking at the logs would just see a trusted AI agent at work. To prevent this, we need a new class of identity governance. First, no shared credentials. Every AI agent needs a unique identity for every autonomous task, with strict, time-bound permissions. Second, you need a “digital supervisor”: every action the AI takes on an OT system must be recorded and auditable, just as it would be for a high-risk remote contractor. This creates a tamper-evident chain of evidence and allows for real-time anomaly detection that can halt the agent before it causes a catastrophe.
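The two controls described above can be sketched together: a credential issued per agent per task that expires, and a supervisor that every write request must pass through, which records the request and halts the agent on a violation. All names here (the tag names, limits, and classes) are hypothetical, and the in-memory list stands in for what would need to be an append-only, tamper-evident log in practice.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TaskCredential:
    agent_id: str
    task_id: str
    allowed_tags: frozenset  # PLC tags this task may write (hypothetical tag names)
    expires_at: float        # epoch seconds


def issue_credential(agent_id: str, tags: set, ttl_s: float) -> TaskCredential:
    """One credential per agent per task, never shared, expiring after ttl_s seconds."""
    return TaskCredential(agent_id, str(uuid.uuid4()), frozenset(tags), time.time() + ttl_s)


@dataclass
class Supervisor:
    safe_ranges: dict                            # tag -> (min, max) engineering limits
    max_step: dict                               # tag -> largest change allowed per write
    audit_log: list = field(default_factory=list)
    halted: set = field(default_factory=set)     # agents stopped pending human review

    def request_write(self, cred: TaskCredential, tag: str, current: float, new: float) -> bool:
        reason = None
        if cred.agent_id in self.halted:
            reason = "agent halted"
        elif time.time() > cred.expires_at:
            reason = "credential expired"
        elif tag not in cred.allowed_tags:
            reason = "tag outside task scope"
        else:
            lo, hi = self.safe_ranges[tag]
            if not lo <= new <= hi:
                reason = "outside safe envelope"
            elif abs(new - current) > self.max_step[tag]:
                reason = "step too large"
        allowed = reason is None
        # Every request, allowed or not, is recorded against the task-specific identity.
        self.audit_log.append({"ts": time.time(), "agent": cred.agent_id, "task": cred.task_id,
                               "tag": tag, "from": current, "to": new,
                               "allowed": allowed, "reason": reason})
        if reason in ("outside safe envelope", "step too large"):
            self.halted.add(cred.agent_id)  # stop the agent until a human reviews the log
        return allowed


sup = Supervisor(safe_ranges={"reactor_temp_c": (20.0, 80.0)}, max_step={"reactor_temp_c": 2.0})
cred = issue_credential("mix-optimizer", {"reactor_temp_c"}, ttl_s=3600)
print(sup.request_write(cred, "reactor_temp_c", 60.0, 61.5))  # small, in-range change
print(sup.request_write(cred, "reactor_temp_c", 61.5, 85.0))  # outside envelope: agent halted
```

Note that per-tag limits like these would not, on their own, catch a poisoned model steering several variables into a dangerous combination of individually valid values; a real supervisor would also need cross-variable interlock checks and behavioral baselines for the agent.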

Almog also states that hard IT/OT segmentation is now a necessity. Beyond network firewalls, what does ‘identity-based separation’ actually entail for controlling machine-to-machine access? Please provide a few key metrics an organization can use to measure the effectiveness of its new segmentation strategy.

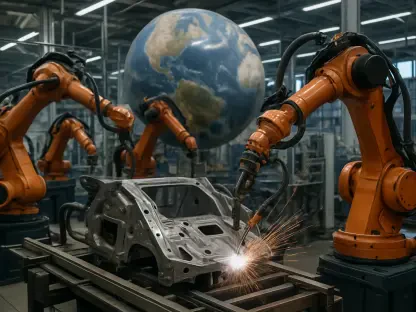

He’s right: the old model of a single flat network on the plant floor is a recipe for disaster. A firewall between IT and OT is just the first step. Identity-based separation goes much deeper. It means that access is granted based on the verified identity of the user or machine, not just its network location. For machine-to-machine traffic, this means that the specific PLC controlling a robotic arm on Assembly Line A is only permitted to communicate with its designated HMI and the data historian server. It is explicitly forbidden from ever talking to a PLC on Assembly Line B, or to the building’s HVAC system, even if they’re physically connected to the same switch. This is Zero Trust applied at the machine level.
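The Assembly Line A example reduces to a default-deny rule keyed on verified identities rather than addresses. The sketch below is a minimal illustration with hypothetical identity names; in practice, identities might be bound to device certificates and enforced by the network or a policy enforcement point, which this sketch does not model. Network location is deliberately not an input, so sharing a switch grants nothing.

```python
# Hypothetical machine identities and the only flows permitted between them.
ALLOWED_FLOWS = {
    ("plc-line-a-robot", "hmi-line-a"),
    ("hmi-line-a", "plc-line-a-robot"),
    ("plc-line-a-robot", "historian-01"),
}


def authorize(src_identity: str, dst_identity: str, identity_verified: bool) -> bool:
    """Default deny: allow only verified identities on an explicitly listed flow.

    IP address, VLAN, and switch port are intentionally not parameters.
    """
    return identity_verified and (src_identity, dst_identity) in ALLOWED_FLOWS


for dst in ("hmi-line-a", "historian-01", "plc-line-b-press", "hvac-controller"):
    print(dst, authorize("plc-line-a-robot", dst, identity_verified=True))
```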

To measure its effectiveness, you can’t just look at firewall logs. First, track the percentage of critical OT assets that are governed by an identity-based access policy. The goal should be 100%. Second, measure the number of unauthorized machine-to-machine connection attempts that are blocked per day. This shows the policy is actively preventing lateral movement. Finally, and most importantly, measure the blast radius during penetration tests. If you compromise one HMI, can you pivot to the entire plant, or is the damage contained to that single, isolated zone? A shrinking blast radius is the ultimate metric of an effective segmentation strategy.
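The three metrics can be computed from data most segmentation projects already produce. The sketch below assumes hypothetical inputs: sets of asset names, a set of permitted (source, destination) flows, and connection events as dictionaries with `date` and `allowed` keys. The blast-radius function is a breadth-first search over permitted flows, so it measures what the policy allows an attacker to reach from one foothold; a penetration test then checks whether enforcement actually matches that model.

```python
from collections import Counter, deque


def policy_coverage(critical_assets: set, governed_assets: set) -> float:
    """Percentage of critical OT assets governed by an identity-based access policy."""
    if not critical_assets:
        return 100.0
    return 100.0 * len(critical_assets & governed_assets) / len(critical_assets)


def blocked_per_day(events: list[dict]) -> Counter:
    """Count denied machine-to-machine connection attempts per day."""
    return Counter(e["date"] for e in events if not e["allowed"])


def blast_radius(allowed_flows: set, start: str) -> set:
    """Assets reachable from a compromised `start` asset by following only permitted flows."""
    graph: dict[str, set] = {}
    for src, dst in allowed_flows:
        graph.setdefault(src, set()).add(dst)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {start}


flows = {
    ("hmi-line-a", "plc-line-a-robot"),
    ("plc-line-a-robot", "historian-01"),
    ("hmi-line-b", "plc-line-b-press"),
}
print(policy_coverage({"plc-a", "plc-b", "hmi-a", "hmi-b"}, {"plc-a", "plc-b", "hmi-a"}))
print(sorted(blast_radius(flows, "hmi-line-a")))
```

Tracked over successive policy revisions and pen tests, a shrinking `blast_radius` set for each compromised starting point is the quantitative form of the “contained to a single zone” goal.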

Josh Taylor predicts the line between nation-state and criminal actors will blur into ‘hybrid threats.’ What behavioral indicators might signal that a ransomware attack also has an espionage motive? How would a typical incident response playbook need to change to address such a multifaceted threat?

This blurring is one of the most dangerous trends because it complicates everything. A purely criminal ransomware attack is noisy and fast; they want to break in, encrypt, and get paid. A hybrid threat is more patient and nuanced. One key behavioral indicator is a long dwell time before the ransomware is deployed. If forensic data shows the attacker was inside your network for months, quietly moving around and exfiltrating specific data before they finally pulled the trigger on encryption, that’s a huge red flag. Another indicator is the type of data they steal—if they ignore financial records but take terabytes of proprietary chemical formulas or sensitive operational designs, it’s a safe bet that money isn’t their only motive.
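The two indicators in this answer can be turned into a simple triage heuristic for forensic findings. In the sketch below, the data categories, the 30-day dwell threshold, and the share cutoffs are hypothetical choices, not established thresholds; the point is only to show how dwell time and the mix of stolen data could be checked systematically rather than by gut feel.

```python
from datetime import datetime

# Hypothetical data categories; a real classification would come from a data catalog.
IP_CATEGORIES = {"chemical_formulas", "cad_designs", "process_recipes"}
FINANCIAL_CATEGORIES = {"invoices", "payroll", "bank_records"}


def hybrid_indicators(first_intrusion: datetime, encryption_start: datetime,
                      exfil_bytes_by_category: dict, dwell_threshold_days: int = 30) -> list[str]:
    """Return red flags suggesting a ransomware incident may also have an espionage motive."""
    flags = []
    # Indicator 1: a long, quiet dwell time before encryption.
    dwell_days = (encryption_start - first_intrusion).days
    if dwell_days >= dwell_threshold_days:
        flags.append(f"long dwell time: {dwell_days} days before encryption")
    # Indicator 2: stolen data skewed toward intellectual property rather than finance.
    total = sum(exfil_bytes_by_category.values())
    if total:
        ip = sum(b for c, b in exfil_bytes_by_category.items() if c in IP_CATEGORIES)
        fin = sum(b for c, b in exfil_bytes_by_category.items() if c in FINANCIAL_CATEGORIES)
        if ip / total > 0.5 and fin / total < 0.1:
            flags.append(f"exfiltration skewed to IP: {ip / total:.0%} of stolen bytes")
    return flags


flags = hybrid_indicators(
    datetime(2025, 1, 10), datetime(2025, 4, 2),
    {"chemical_formulas": 9 * 10**12, "invoices": 10**11},
)
for flag in flags:
    print(flag)
```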

A standard incident response playbook is built for speed: contain the breach, eradicate the malware, and restore operations from backup. It’s a firefighting exercise. For a hybrid threat, that playbook is dangerously inadequate. You must run two parallel responses. One track is the classic ransomware response to get the plant back online. The second, more critical track is a full-blown counter-espionage investigation. You have to assume the adversary is still in your network and that the ransomware was just a loud distraction. This requires bringing in specialized threat intelligence and forensics teams to hunt for persistent backdoors and determine the full scope of the intellectual property theft. The goal is no longer just recovery; it’s about understanding and surviving a long-term geopolitical confrontation.

What is your forecast for how the C-suite’s perception of OT security will evolve from a cost center to a core component of operational resilience?

My forecast is that this evolution is no longer a choice; it’s being forced by reality. For decades, OT security was a line item that was easy to cut because the risk felt abstract. But with downtime events now averaging over 360 hours a year and ransomware regularly taking down entire production lines, the C-suite is starting to connect the dots in a painful, tangible way. They’re realizing that a security vulnerability isn’t just an IT problem; it’s a direct threat to revenue, safety, and brand reputation. When a cyber incident can halt a billion-dollar factory, the conversation shifts. The CFO and COO will start viewing investment in identity-based access, network segmentation, and supervised remote maintenance not as a cost, but as an insurance policy that enables operational continuity. Security will move from being the department of “no” to a strategic partner in ensuring the plant can run safely and predictably, which is the ultimate goal of any manufacturing operation.