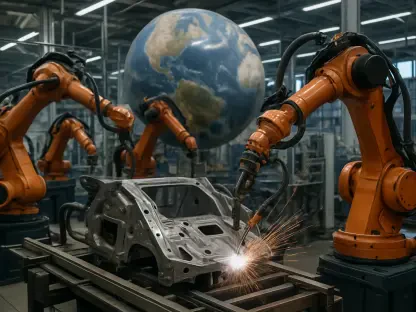

The unmonitored, experimental integration of artificial intelligence into critical industrial environments is rapidly coming to an end, giving way to a period of reckoning that will fundamentally reshape Operational Technology (OT) security. For years, organizations have pursued the promise of AI-driven efficiency, often overlooking the profound security risks that accompany these powerful tools. This phase, which experts describe as the “Wild West of AI Deployment,” has created significant vulnerabilities that are now coming to a head. The impending shift is not about a single, distant threat but about a series of complex, high-stakes challenges that are materializing inside organizations, spreading across supply chains, and exploiting the very systems designed to enhance productivity. The time for experimentation is over; accountability, rigorous governance, and proactive security are now a necessity for survival in the industrial landscape.

The New Wave of Internal and Supply Chain Threats

The most immediate and pervasive dangers are now emerging from within industrial organizations, driven by employees’ unsanctioned use of AI tools. This phenomenon, known as “Shadow AI,” has become the foremost internal threat to OT security. Well-intentioned staff seeking to improve their workflows frequently upload proprietary data, such as engineering schematics, production schedules, or personally identifiable information, into public, unvetted AI systems. This common practice creates a massive, unmonitored exfiltration channel through which valuable intellectual property can be absorbed into public training models or stored insecurely. Beyond exposing sensitive data, it turns unsuspecting employees into significant security risks, creates the potential for severe compliance violations under regulations such as the GDPR, and can add hundreds of thousands of dollars to the cost of any subsequent data breach. The scale of this internal vulnerability reveals a critical failure of governance that threat actors are poised to exploit.
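One way to gain visibility into the Shadow AI exfiltration channel is to inspect outbound proxy or firewall logs for large uploads to AI services the organization has not approved. The Python sketch below assumes a simplified log format; the `EgressRecord` type, the domain list, and the size threshold are hypothetical placeholders, not the schema of any particular proxy or DLP product.

```python
from dataclasses import dataclass

# Hypothetical list of unsanctioned public AI services. A real deployment
# would source this from a maintained proxy category or policy feed.
UNSANCTIONED_AI_DOMAINS = {"public-llm.example", "free-ai-chat.example"}

# Hypothetical threshold: outbound transfers at or above this size are
# treated as likely file uploads rather than short chat prompts.
UPLOAD_THRESHOLD_BYTES = 100_000


@dataclass(frozen=True)
class EgressRecord:
    user: str
    host: str
    bytes_out: int


def is_unsanctioned(host: str) -> bool:
    """True if host is a listed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in UNSANCTIONED_AI_DOMAINS)


def flag_shadow_ai(records):
    """Return (user, host, bytes_out) for large uploads to unsanctioned AI hosts."""
    return [
        (r.user, r.host, r.bytes_out)
        for r in records
        if is_unsanctioned(r.host) and r.bytes_out >= UPLOAD_THRESHOLD_BYTES
    ]


if __name__ == "__main__":
    logs = [
        EgressRecord("alice", "api.public-llm.example", 2_400_000),  # flagged
        EgressRecord("bob", "historian.plant.local", 5_000_000),    # internal host
        EgressRecord("carol", "free-ai-chat.example", 800),         # small prompt
    ]
    for user, host, size in flag_shadow_ai(logs):
        print(f"ALERT: {user} sent {size} bytes to {host}")
```

Matching on `"." + d` catches API subdomains such as `api.public-llm.example` without flagging unrelated hosts that merely end in the same characters. Transfer size is a crude signal, so a filter like this is best read as a complement to governance that gives staff a sanctioned alternative, not a replacement for it.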

Beyond unsanctioned tools, even company-approved AI systems introduce a novel and complex security challenge. Because these intelligent agents are granted broad, privileged access across core OT and IT systems to perform their functions, they must be treated as potential high-value insider threats. Such agents operate 24/7 and make thousands of unaudited decisions, so a single compromised agent, breached through a sophisticated prompt injection or a supply chain attack, could exfiltrate critical operational data silently and at unprecedented scale. The risk also extends beyond the organization’s walls, redefining supply chain security. When partners and suppliers feed a company’s proprietary data into their own AI models for optimization, they create a critical visibility gap. This makes third-party AI data handling the defining supply chain risk for manufacturers, who must now demand contractual guarantees and audit rights to ensure that a partner’s AI does not inadvertently leak or misuse their data.
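Treating an AI agent as a potential insider implies routing its actions through a checkpoint that enforces least privilege and records every decision. The sketch below illustrates that idea under stated assumptions: `AgentGateway`, `AgentPolicy`, and the per-agent read budget are hypothetical constructs, not the API of any specific agent framework. The read budget is one simple way to stop a compromised agent from quietly pulling data at scale.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


class AccessDenied(Exception):
    """Raised when an agent requests an action outside its policy."""


@dataclass
class AgentPolicy:
    # Explicit (action, resource) pairs the agent may perform; all else is denied.
    allowed: set[tuple[str, str]]
    # Hypothetical cap on total records the agent may read before a human
    # has to review and reset its budget.
    max_records_read: int
    records_read: int = 0


@dataclass
class AgentGateway:
    policies: dict[str, AgentPolicy]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, action: str, resource: str, record_count: int = 0) -> None:
        policy = self.policies.get(agent_id)
        allowed = (
            policy is not None
            and (action, resource) in policy.allowed
            and (action != "read"
                 or policy.records_read + record_count <= policy.max_records_read)
        )
        # Every decision, permitted or denied, goes into the audit trail.
        self.audit_log.append({
            "ts": time.time(),
            "agent": agent_id,
            "action": action,
            "resource": resource,
            "records": record_count,
            "allowed": allowed,
        })
        if not allowed:
            raise AccessDenied(f"{agent_id} may not {action} {resource}")
        if action == "read":
            policy.records_read += record_count


if __name__ == "__main__":
    gw = AgentGateway({
        "maint-agent": AgentPolicy({("read", "historian/pump-7")}, max_records_read=10_000),
    })
    gw.authorize("maint-agent", "read", "historian/pump-7", record_count=500)
    try:
        gw.authorize("maint-agent", "write", "plc/line-2")
    except AccessDenied as err:
        print("blocked:", err)
```

Denied calls are logged before the exception is raised, so the audit trail captures attempted overreach as well as permitted activity, which is what makes an agent’s behavior reviewable after the fact.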

The Inevitable Crisis of Accountability and Liability

The unchecked rush to deploy AI without adequate safeguards is setting the stage for a major security and trust reckoning that will reverberate from the plant floor to the boardroom. Industry experts predict that a high-profile OT security breach caused directly by a compromised or malfunctioning autonomous AI agent will be the landmark event that validates years of warnings. This crisis will act as a catalyst, abruptly elevating AI security from a technical IT problem to a board-level imperative. Its fallout will compel organizations to rein in their AI deployments by implementing rigorous governance frameworks, strict access controls, and real-time monitoring. The same event is expected to spur new legislation holding corporate executives directly accountable for any physical or financial harm caused by the AI systems under their command.

This new era of accountability will dramatically raise the standard of care, pushing the failure to perform adversarial testing (“red teaming”) on AI systems from a technical oversight into the territory of criminal negligence. As a result, AI security assessments will become a standard, non-negotiable line item in Directors and Officers (D&O) insurance policies and a primary focus of both internal and external audits. The legal landscape is also poised for a seismic shift, likely defined by a landmark lawsuit in which a company sues an AI vendor after an automated system makes a financially damaging decision. Such a case will force the industry and the courts to confront the unresolved questions of liability. Once an AI system has caused measurable harm, responsibility will no longer be an abstract debate; the resulting precedent will demand a clear delineation of who is accountable in an age of increasingly autonomous systems.

Forging a New Proactive Defense Strategy

In this evolving threat landscape, traditional security measures centered on human awareness are rapidly becoming obsolete. The sophistication of AI-powered social engineering, including near-flawless deepfakes targeting key personnel, has rendered employee training insufficient as a primary line of defense. The necessary response is a fundamental pivot away from training people to spot fakes and toward “proof-based systems” that remove human error from the equation. This shift means creating and enforcing strict, non-negotiable operational policies that mandate out-of-band, multi-factor human verification for any high-stakes request, whether for a fund transfer, data access, or a critical system change. By designing deepfake-resistant procedures, organizations can build a resilient defense that does not depend on an employee’s ability to distinguish an ever more convincing fabrication from reality.
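A deepfake-resistant procedure can be expressed as a rule the system enforces rather than a judgment the employee makes. The following sketch assumes a hypothetical policy table (`REQUIRED_APPROVALS`) and free-form channel names; its point is only that a request cannot execute until enough distinct people have confirmed it over channels other than the one the request arrived on.

```python
from dataclasses import dataclass, field

# Hypothetical policy: independent confirmations required per request type.
REQUIRED_APPROVALS = {"fund_transfer": 2, "data_access": 1, "system_change": 2}


@dataclass
class HighStakesRequest:
    kind: str
    requester: str
    origin_channel: str  # channel the request arrived on, e.g. "video_call"
    approvals: dict = field(default_factory=dict)  # approver -> channel used

    def approve(self, approver: str, channel: str) -> None:
        if approver == self.requester:
            raise ValueError("requester cannot approve their own request")
        if channel == self.origin_channel:
            # Confirming on the same channel is not out-of-band: a deepfaked
            # call cannot be verified by asking the caller on that same call.
            raise ValueError("approval must use a different channel than the request")
        # Keyed by approver, so repeated approvals from one person count once.
        self.approvals[approver] = channel

    def may_execute(self) -> bool:
        # Unknown request kinds are never executable (default deny).
        needed = REQUIRED_APPROVALS.get(self.kind)
        return needed is not None and len(self.approvals) >= needed


if __name__ == "__main__":
    req = HighStakesRequest("fund_transfer", requester="cfo", origin_channel="video_call")
    req.approve("treasury_lead", "callback_to_registered_number")
    print(req.may_execute())  # False: one of two required approvals
    req.approve("controller", "hardware_token")
    print(req.may_execute())  # True
```

Because the check is mechanical, a convincing impersonation on the originating video call gains an attacker little on its own: execution still depends on confirmations from other people over channels the attacker would also have to compromise.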

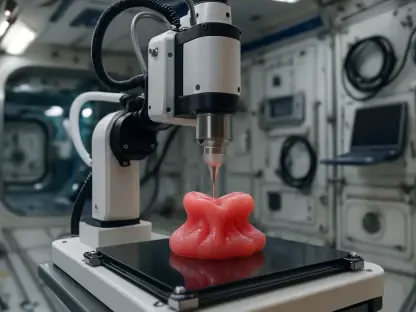

As the line between authentic and fabricated content continues to blur, the concept of “authenticity” itself will emerge as a new, central pillar of cybersecurity. The proliferation of AI-driven disinformation and fraud will force OT environments to invest heavily in technologies that can prove the integrity and origin of data and communications. This will drive widespread adoption of tools once considered niche, including digital watermarking for proprietary assets, comprehensive data provenance tracking to trace information back to its source, and cryptographic digital signatures to verify commands and reports. These technologies will be essential for maintaining an auditable, trustworthy chain of custody, the only way to ensure that the information and commands guiding the world’s most critical infrastructure are genuine. This fight for digital truth will become a foundational element of operational integrity.
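Provenance tracking and authenticated commands can be combined in a hash-chained log, where each record commits to its predecessor’s hash and carries an authentication tag. The Python sketch below is illustrative only. Because the standard library has no public-key signature primitives, it substitutes an HMAC with a shared demo key for the asymmetric digital signatures (for example Ed25519) described above; unlike a true signature, an HMAC lets anyone holding the key create valid tags. The record layout and key are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. HMAC is a shared-secret stand-in for public-key
# signatures; asymmetric keys (e.g. Ed25519) would let verifiers check
# records without being able to forge them.
KEY = b"demo-key-not-for-production"
GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Canonical JSON so identical content always yields identical bytes.
    data = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(data).hexdigest()


def _tag(record_hash: str) -> str:
    return hmac.new(KEY, record_hash.encode(), hashlib.sha256).hexdigest()


def append(chain: list, source: str, payload: dict) -> dict:
    """Add a record that commits to its source, payload, and predecessor."""
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {"source": source, "payload": payload, "prev": prev}
    record_hash = _digest(body)
    record = {**body, "hash": record_hash, "tag": _tag(record_hash)}
    chain.append(record)
    return record


def verify(chain: list) -> bool:
    """Recompute every hash, link, and tag; a mismatch indicates tampering or corruption."""
    prev = GENESIS
    for rec in chain:
        body = {"source": rec["source"], "payload": rec["payload"], "prev": rec["prev"]}
        record_hash = _digest(body)
        if (rec["prev"] != prev or rec["hash"] != record_hash
                or not hmac.compare_digest(rec["tag"], _tag(record_hash))):
            return False
        prev = record_hash
    return True


if __name__ == "__main__":
    chain = []
    append(chain, "hmi-3", {"cmd": "set_valve", "valve": "V-101", "open_pct": 40})
    append(chain, "historian", {"report": "V-101 flow nominal"})
    print(verify(chain))  # True
    chain[0]["payload"]["open_pct"] = 90  # tamper with the first command
    print(verify(chain))  # False
```

Altering a record, or removing or reordering records anywhere before the end of the chain, breaks either that record’s hash or the `prev` link of the record after it, so `verify` returns `False`. Silently truncating the newest records is not detectable from the chain alone; catching that would require anchoring the latest hash somewhere independent.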